Robust fusion of colour and depth data for RGB-D target tracking using adaptive range-invariant depth models and spatio-temporal consistency constraints

Jingjing Xiao, Rustam Stolkin, Yuqing Gao, Aleš Leonardis

Abstract

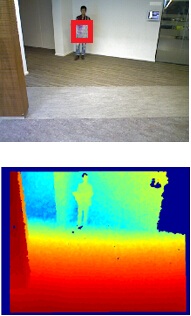

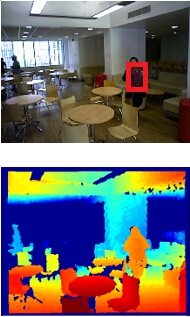

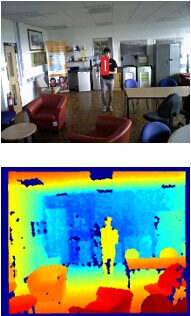

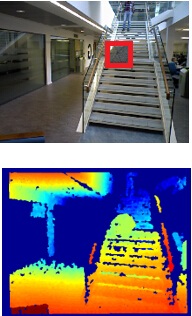

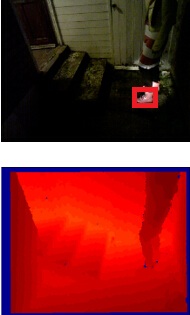

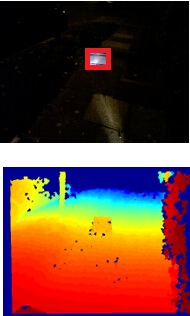

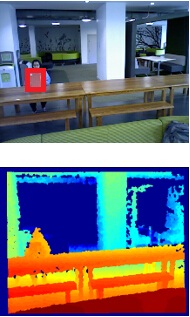

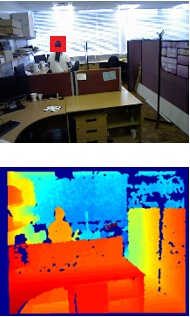

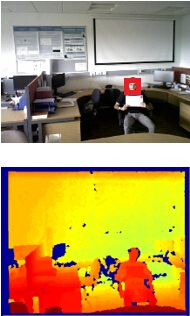

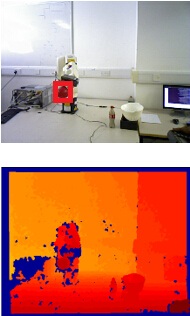

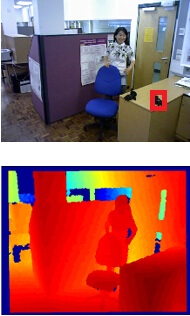

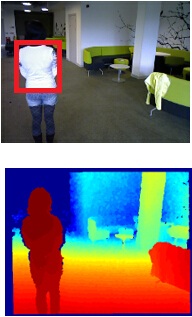

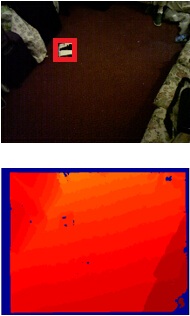

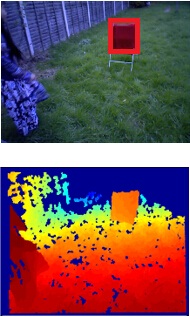

This paper presents a novel robust method for single-target tracking in RGB-D images, and also contributes a substantial new benchmark dataset for evaluating RGB-D trackers. While a target object’s colour distribution is reasonably motion-invariant, this is not true of the target’s depth distribution, which continually varies as the target moves relative to the camera. It is therefore non-trivial to design target models that can fully exploit (potentially very rich) depth information for target tracking. For this reason, much of the previous RGB-D literature relies on colour information for tracking, while exploiting depth information only for occlusion reasoning. In contrast, we propose an adaptive range-invariant target depth model, and show how both depth and colour information can be fully and adaptively fused during the search for the target in each new RGB-D image. We introduce a new, hierarchical, two-layered target model (comprising local and global models) which uses spatio-temporal consistency constraints to achieve stable and robust on-the-fly target relearning. In the global layer, multiple features, derived from both colour and depth data, are adaptively fused to find a candidate target region. In ambiguous frames, where one or more features disagree, this global candidate region is further decomposed into smaller local candidate regions for matching to local-layer models of small target parts. We also note that the conventional use of depth data for occlusion reasoning can easily trigger false occlusion detections when the target moves rapidly towards the camera. To overcome this problem, we show how combining target information with contextual information enables the target’s depth constraint to be relaxed. Our adaptively relaxed depth constraints can robustly accommodate large and rapid target motion in the depth direction, while still enabling the use of depth data for highly accurate reasoning about occlusions.
For evaluation, we introduce a new RGB-D benchmark dataset with per-frame annotated attributes and extensive bias analysis. Our tracker is evaluated using two different state-of-the-art methodologies, VOT [1] and VTB [2], and in both cases it significantly outperforms four other state-of-the-art RGB-D trackers from the literature.

Index Terms: RGB-D tracking, range-invariant depth models, clustered decision tree. [github link]
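The abstract does not give the model equations, so the following is only a minimal sketch of two of the ideas it describes, written under our own assumptions rather than the paper's formulation. It assumes depth in metres with 0 marking missing readings. The range-invariant depth feature here is simply a histogram of depth offsets from the target patch's median depth, so moving the whole target along the camera axis leaves the feature unchanged. The occlusion test flags pixels lying clearly in front of the target, and its tolerance is widened in proportion to the target's approach speed. The function names, the bin settings, `depth_velocity`, `base_tol` and the gain `k` are all hypothetical; in particular, the paper relaxes the depth constraint using contextual information, which this sketch does not model.

```python
import numpy as np


def relative_depth_histogram(depth_patch, bins=16, half_range=0.5):
    """Normalised histogram of depth offsets from the patch's median depth.

    Hypothetical feature: assumes depth in metres with 0 marking missing
    readings. Because offsets are taken relative to the median, translating
    the whole patch along the camera axis leaves the histogram unchanged.
    """
    d = np.asarray(depth_patch, dtype=float).ravel()
    d = d[d > 0]  # drop missing depth readings
    if d.size == 0:
        return np.zeros(bins)
    offsets = d - np.median(d)
    # Offsets outside [-half_range, half_range] are ignored by np.histogram.
    hist, _ = np.histogram(offsets, bins=bins, range=(-half_range, half_range))
    total = hist.sum()
    return hist / total if total > 0 else np.zeros(bins)


def occlusion_mask(depth_patch, target_depth, depth_velocity, base_tol=0.15, k=2.0):
    """Flag valid pixels lying clearly in front of the target as occluders.

    depth_velocity is an assumed estimate of the target's depth change per
    frame (negative when approaching the camera). The tolerance grows with
    the approach speed, so a target moving quickly towards the camera is
    less likely to be mistaken for an occluder of itself.
    """
    d = np.asarray(depth_patch, dtype=float)
    approach_speed = max(0.0, -depth_velocity)
    tol = base_tol + k * approach_speed
    return (d > 0) & (d < target_depth - tol)


if __name__ == "__main__":
    near = np.array([[1.0, 1.25], [1.5, 1.75]])
    # The same surface seen 1 m further away gives an identical histogram.
    print(np.allclose(relative_depth_histogram(near), relative_depth_histogram(near + 1.0)))  # True
    depth = np.array([[2.0, 1.5], [1.0, 0.0]])
    # Static target: two occluding pixels; fast approach: the 1.5 m pixel is tolerated.
    print(occlusion_mask(depth, 2.0, 0.0).sum(), occlusion_mask(depth, 2.0, -0.3).sum())  # 2 1
```

With a fixed tolerance, a target approaching at 0.3 m per frame would see parts of itself reported as occluders; scaling the tolerance with approach speed is one simple way to relax the constraint, chosen here for illustration only.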

Share your results on this benchmark to get more citations! Please email: shine636363@sina.com

Dataset

Results

| Tracker | Overall | Stationary | Moving | IV | DV | SV | CDV | DDV | SDC | SCC | BCC | BSC | PO | fps |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | 8.36 | 9.57 | 7.18 | 5.78 | 7.56 | 5.07 | 5.12 | 7.66 | 8.01 | 9.53 | 6.67 | 7.17 | 7.73 | 14.73 |
| DS-KCF* [3] | 8.21 | 9.36 | 7.13 | 6.10 | 7.88 | 4.39 | 0.94 | 5.26 | 7.93 | 9.81 | 5.66 | 6.50 | 7.76 | 17.89 |
| PT [4] | 7.44 | 8.54 | 6.43 | 4.15 | 6.65 | 2.80 | 0.43 | 3.48 | 6.79 | 8.23 | 5.74 | 5.76 | 6.20 | 0.14 |
| DS-KCF [5] | 7.23 | 7.52 | 6.85 | 5.50 | 7.06 | 3.36 | 1.43 | 4.16 | 7.89 | 8.25 | 4.82 | 5.16 | 6.02 | 20.95 |
| OAPF [6] | 5.24 | 6.0 | 4.54 | 3.19 | 4.45 | 3.07 | 3.22 | 3.71 | 5.00 | 6.13 | 3.79 | 4.82 | 5.82 | 1.30 |

Table 1 AUC OF BOUNDING-BOX OVERLAP
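Table 1 ranks trackers by the area under the curve (AUC) of bounding-box overlap. A minimal sketch of one common VTB/OTB-style definition follows: compute the intersection-over-union (IoU) of predicted and ground-truth boxes per frame, measure the fraction of frames whose overlap exceeds each threshold in [0, 1], and average those success rates. The box format `(x, y, width, height)`, the 21 evenly spaced thresholds and the function names are assumptions for illustration; the scale used in Table 1 (values such as 8.36) is not stated here, so this sketch returns AUC in [0, 1].

```python
import numpy as np


def iou(a, b):
    """Intersection-over-union of two boxes given as (x, y, width, height)."""
    ix = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def success_auc(pred_boxes, gt_boxes, n_thresholds=21):
    """Area under the success plot, approximated as the mean success rate.

    The success rate at threshold t is the fraction of frames whose overlap
    exceeds t; thresholds are spaced evenly over [0, 1].
    """
    overlaps = np.array([iou(p, g) for p, g in zip(pred_boxes, gt_boxes)])
    thresholds = np.linspace(0.0, 1.0, n_thresholds)
    return float(np.mean([(overlaps > t).mean() for t in thresholds]))


if __name__ == "__main__":
    gt = [(0, 0, 10, 10), (0, 0, 10, 10)]
    pred = [(0, 0, 10, 10), (5, 0, 10, 10)]
    print(round(iou(gt[1], pred[1]), 4))  # 0.3333
    print(round(success_auc(pred, gt), 4))  # 0.6429
```

Averaging over thresholds rewards trackers whose boxes stay tightly aligned, not just roughly on target, which is why AUC is often preferred to a success rate at a single threshold such as 0.5.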

| Tracker | Metric | Overall | Stationary | Moving | IV | DV | SV | CDV | DDV | SDC | SCC | BCC | BSC | PO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | Fail. | 2.11 | 1.56 | 2.67 | 0.11 | 0.50 | 0.19 | 0.00 | 0.25 | 0.97 | 0.22 | 1.17 | 0.67 | 0.50 |
| | Acc. | 0.52 | 0.54 | 0.50 | 0.41 | 0.49 | 0.41 | 0.46 | 0.47 | 0.52 | 0.52 | 0.49 | 0.50 | 0.46 |
| DS-KCF* [3] | Fail. | 2.69 | 2.50 | 2.89 | 0.06 | 0.78 | 0.22 | 0.03 | 0.36 | 1.44 | 0.69 | 1.67 | 1.28 | 0.58 |
| | Acc. | 0.50 | 0.51 | 0.50 | 0.48 | 0.49 | 0.46 | 0.41 | 0.53 | 0.44 | 0.43 | 0.57 | 0.57 | 0.49 |
| PT [4] | Fail. | 10.61 | 8.61 | 12.61 | 0.33 | 3.14 | 1.42 | 0.19 | 1.03 | 5.78 | 2.33 | 4.31 | 3.83 | 3.61 |
| | Acc. | 0.64 | 0.63 | 0.64 | 0.53 | 0.65 | 0.55 | 0.60 | 0.65 | 0.62 | 0.62 | 0.64 | 0.63 | 0.57 |
| DS-KCF [5] | Fail. | 3.36 | 3.00 | 3.72 | 0.08 | 0.64 | 0.28 | 0.03 | 0.31 | 1.39 | 0.92 | 1.56 | 1.47 | 1.11 |
| | Acc. | 0.56 | 0.56 | 0.47 | 0.50 | 0.56 | 0.48 | 0.54 | 0.58 | 0.55 | 0.52 | 0.53 | 0.51 | 0.54 |
| OAPF [6] | Fail. | 5.78 | 3.72 | 7.83 | 0.19 | 1.42 | 0.50 | 0.11 | 0.61 | 2.75 | 1.36 | 2.61 | 1.86 | 1.47 |
| | Acc. | 0.35 | 0.37 | 0.33 | 0.25 | 0.32 | 0.25 | 0.22 | 0.27 | 0.35 | 0.36 | 0.32 | 0.35 | 0.33 |

Table 2 VOT PROTOCOL RESULTS
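Table 2 reports, for each tracker, a failure count ("Fail.") and an accuracy ("Acc."). Under the VOT methodology a tracker is re-initialised after it loses the target, robustness is measured by how often that happens, and accuracy is the mean overlap over frames where tracking is valid. The sketch below is a simplified, offline illustration of those two quantities from a list of per-frame overlaps; it is not the VOT toolkit. It assumes an overlap of 0 marks a failure, that re-initialisation happens `skip` frames later, and that the first `burn_in` frames after each (re-)initialisation are excluded from accuracy. The defaults of 5 and 10 mirror values used in VOT challenges, but treat them and the function name as assumptions. In a real VOT run the tracker is actually restarted at the re-initialisation frame, which a precomputed overlap list can only approximate.

```python
def vot_failures_and_accuracy(overlaps, skip=5, burn_in=10):
    """Simplified VOT-style failure count and accuracy from per-frame overlaps.

    overlaps: per-frame IoU of a supervised run; 0 marks a failure frame.
    After a failure the tracker is assumed to be re-initialised `skip` frames
    later, and the first `burn_in` frames after every (re-)initialisation are
    left out of the accuracy average.
    """
    failures, valid = 0, []
    i, since_init = 0, 0
    while i < len(overlaps):
        if overlaps[i] <= 0.0:
            failures += 1
            i += skip  # jump ahead to the assumed re-initialisation frame
            since_init = 0
            continue
        if since_init >= burn_in:
            valid.append(overlaps[i])
        since_init += 1
        i += 1
    accuracy = sum(valid) / len(valid) if valid else 0.0
    return failures, accuracy


if __name__ == "__main__":
    # 12 frames at overlap 0.5, one failure, then 20 frames at 0.9.
    run = [0.5] * 12 + [0.0] + [0.9] * 20
    fails, acc = vot_failures_and_accuracy(run)
    print(fails, round(acc, 3))  # 1 0.8
```

Reporting failures and accuracy separately, as Table 2 does, exposes trade-offs a single score hides: a tracker can produce tightly fitting boxes while failing often, or rarely fail while drifting loosely around the target.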

Acknowledgement

This work was funded by EU H2020 RoMaNS 645582. It was also partially supported by EPSRC EP/M026477/1 and the National Key Research and Development Program of China, No. 2016YFC0103100. We also acknowledge MoD/Dstl and EPSRC for providing the grant to support the UK academic's (Aleš Leonardis) involvement in a Department of Defense funded MURI project. We also thank Heng Yang for great help and insightful discussions.

References